Splitgraph has been acquired by EDB! Read the blog post.

Using Dagster with Seafowl

Import the result of your data pipeline into Seafowl easily using Dagster!

Why Seafowl

Seafowl is a database designed to power data-driven Web apps like dashboards and interactive visualizations. Its cache-friendly HTTP API is optimized for clients sending SQL queries directly from the browser. As a single-binary database (built in Rust with DataFusion and delta-rs), it's easily deployable to multi-region cloud functions that run "at the edge" and query columnar data in object storage.

Of course, before Seafowl can answer clients' SQL queries, it must have access to the data in question. For Parquet or CSV data accessible over HTTP, Seafowl's external tables feature enables querying without any preceding import step.

For everything else, the data must be uploaded into Seafowl. In this blog post, we'll demonstrate how to populate a Seafowl table using Dagster, the hip new Pythonic framework that excels at creating declarative and reactive data pipelines.

Why Dagster

Dagster solves the problem of unmaintainable data pipelines, by providing a framework for defining them with Python functions that you can run from the command line or a web UI. It doesn't require too much ceremony or "activation energy" to start using it, so even the simplest Python scripts or dbt jobs can start life as a Dagster asset and benefit from features like parallel processing of data on multiple nodes. You can add complexity if and when it becomes required.

If you want to run Dagster on your own, you can: it's open source. But Dagster Cloud is also available, so the same Python codebase can grow from a proof-of-concept on a developer's laptop to a production data pipeline powering applications gradually, without full rewrites.

Inserting data into Seafowl from a Dagster pipeline

Here's how easy it is to append an "upload to Seafowl" step to the end of the Hacker News data pipeline from the canonical "Hello Dagster" example:

@op

def export_to_seafowl(

context, config: ExportToSeafowlConfig, data: pd.DataFrame

) -> None:

context.log.info("exporting to seafowl")

conn = SeafowlConnectionParams(

url=config.url,

secret=config.secret,

database=None)

destination = QualifiedTableName(schema="public", table=config.table)

dataframe_to_seafowl(data, conn, destination)

Check out the demo project repository to follow along as we load the data used in the official Hello Dagster example into Seafowl!

3-minute setup

Seafowl

If you don't already have a running Seafowl instance, head over to the Seafowl Releases page and download the appropriate build for your platform.

Move the seafowl binary into the demo repo's folder prior to execution to use the provided seafowl.toml configuration.

Python and Dagster setup

You will also need a Python installation containing Dagster and the Python Seafowl client.

The following commands create such a virtualenv for you:

python3 -m venv venv

# Note: the following command is required before running anything installed in the virtualenv

. venv/bin/activate

pip install -e 'git+https://git@github.com/splitgraph/seafowl.git@main#egg=seafowl[pandas]&subdirectory=examples/clients/python'

pip install dagster dagit

Starting Dagster

To start the Dagster UI (called "Dagit"), run the following:

dagster dev -f demo.py

Once the UI is running, the assets must be materialized before the data can be sent to Seafowl:

After the assets materialize, it's time to configure and run the hn_stories_to_seafowl_pipeline job:

Querying Seafowl

The Python Seafowl Client library can be used from the command line to verify the data has been successfully written to Seafowl by the Dagster job:

PASSWORD="iHBHflYfEaMpX4j7Du0z6vFNS6w2BMaX" ENDPOINT="http://127.0.0.1:8080/q" python -m seafowl "SELECT * FROM hn"

Other ways to load data into Seafowl

As convenient as Dagster is, you might not need an ETL pipeline if your data is already in a Splitgraph repository.

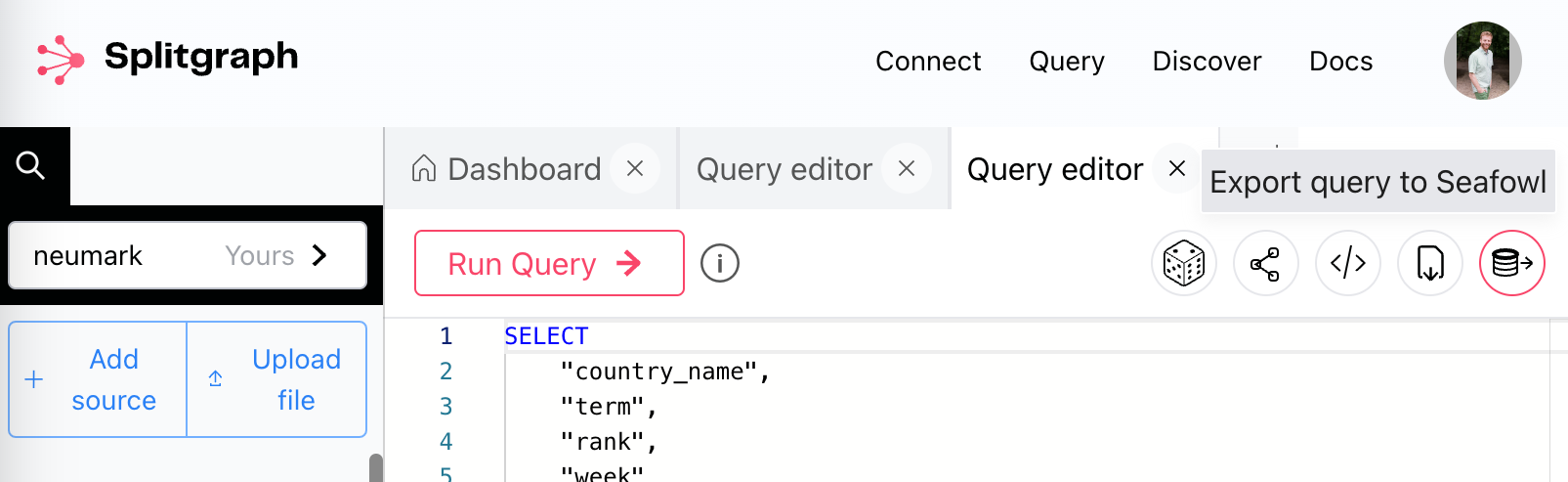

You can export query results directly from Splitgraph to Seafowl. Just click the button with a database icon in the Splitgraph Console:

You can import CSV or Parquet files into Seafowl by uploading them via HTTP.

In the above demo, the Dagster job invokes the Python Seafowl Client to upload a Pandas DataFrame into Seafowl, which can be used directly from custom Python code.

For TypeScript users, the madatdata library provides a polished API for querying and importing data into Seafowl.

Seafowl also includes a Node.js client.

Finally, one can simply INSERT data directly into Seafowl.

The Python Seafowl client's dataframe_to_seafowl() function uses the Parquet upload endpoint if the pyarrow Python package is installed.

Otherwise, it issues INSERT queries for each row individually.

Conclusion

Building interactive analytical applications like Open Data Monitor on Seafowl starts with uploading data. Dagster is a powerful tool for creating data pipelines which populate the Seafowl tables queried by users' browsers.

We're looking forward to seeing the incredible analytical applications you build with Seafowl! If you're excited about using Dagster with Seafowl or missing something, let us know!