Splitgraph has been acquired by EDB! Read the blog post.

Deciding if I'm urban with WebAssembly and Seafowl

I used Seafowl to analyze how much of a city slicker I am—with geographic user-defined WASM functions and caffeine addiction!

Measuring Urbanness

Sometimes a hard-to-measure property has significant impact on performance. For example, it's safe to assume sexier lingerie sells better, but devising an algorithm to classify the "sexiness" of an article of clothing based on its design is far from trivial.

Another ill-defined quality impacting countless more measurable metrics is "urbanness", an individual's preference for densely or sparsely populated areas. All sorts of trends, from voting patterns, to life expectancy and religious affiliation all have marked differences in urban versus rural populations.

Statistically, it makes sense to classify someone as urban or rural based on their current residence, regardless of where they may have lived in the past. On an individual level, it's not always so clear-cut. I currently live in a city with a population of over 1.5 million, but I've also lived in a small town (less than 4000 residents). Today, a census would classify me as urban, but is that who I really am?

I decided to find out using photo location data. Smartphones usually encode the longitude and latitude in the EXIF data of photographs. Over the years, I've collected thousands of photos (21808 to be exact), enough to give me a representative sample of where I prefer to spend my time.

Classifying locations

The geometric center of a city is not always its most densely populated spot. What's a better proxy for "urbanness"?

If urban individuals are those who choose to spend their time in the city, areas offering many places to "hang out" are probably ideal locations for spotting urbanites. Conversely, if one frequents such areas, chances are they can be labelled as urban. And what could possibly be a more urban place to hang out than the largest coffeehouse chain in the world, Starbucks?

Following in the footsteps of Golden Arches Theory of Conflict Prevention, let's define the equally tongue in cheek Omnipresent Siren Metric of Urbanness (OSM) as the number of Starbucks locations within 2 kilometers of any given point on the globe.

Conveniently, there's a dataset of Starbucks locations which can be downloaded from Kaggle, which I used to classify my photos by this metric.

Loading photo coordinates

An easy way to extract EXIF metadata from images is to let Google Photos do it for you. For users of Google Photos, Google Takeout offers a way to download photos along with their EXIF data extracted to separate JSON files. Although not covered here, it's also possible to read location data (and much more) directly from images using exiftool.

After downloading and extracting the Google Takeout archives, I ran the following to get a CSV file with the coordinates of each photo:

# enter Google Photos directory within Takeout directory

cd 'Takeout/Google Photos'

# extract image metadata from JSON files

find 'Photos from'* -name '*.json' -exec jq -c '{latitude: .geoData.latitude, longitude: .geoData.longitude, timestamp: .photoTakenTime.timestamp, filename: input_filename}' {} >> unfiltered_image_attributes.ndjson \;

# only keep image metadata with GPS data (non-smartphone cameras don't write location data)

grep -v '{"latitude":0,' unfiltered_image_attributes.ndjson > filtered_image_attributes.ndjson

# write CSV header

echo "latitude,longitude,timestamp,filename" > photo_attributes.csv

# write CSV data

jq -r '([.latitude, .longitude, .timestamp, .filename]) | @csv' < filtered_image_attributes.ndjson >> photo_attributes.csv

Loading data into Seafowl

Seafowl has great CSV support. To see it in action, install Seafowl if you haven't yet, then run:

curl -v -F "data=@starbucks_directory.csv" "http://localhost:8080/upload/public/starbucks_directory"

curl -v -F "data=@photo_attributes.csv" "http://localhost:8080/upload/public/photo_attributes"

Note that I changed the CSV header of the Kaggle Starbucks dataset for more convenient querying to:

brand,store_number,store_name,ownership_type,street_address,city,state,country,post_code,phone_number,timezone,longitude,latitude

Calculating distances

Seafowl doesn't come with built-in functions for calculating the distance between two points on the planet, but fortunately there is an excellent Rust library we can use.

To call it from Seafowl queries, we must create a user-defined function (UDF) and compile it to WebAssembly. The code for the UDF is available on GitHub.

After running the create_udf.sh script, the previously unavailable distance() function may be used in queries

like so:

-- calculate the distance between Berlin and Paris in meters

-- distance(longitude_1, latitude_1, longitude_2, latitude_2) -> distance in meters

SELECT distance(52.5200, 13.4050, 48.8566, 2.3522)

We're ready to start classifying our photo collection by the Omnipresent Siren Metric!

Calculating proximity: the naive approach

A simple way to calculate nearby Starbucks count for each photo is to find the distance to each Starbucks, then filter out distant Starbucks in the WHERE clause:

SELECT

filename,

COUNT(0) AS nearby_starbucks_count

FROM (

SELECT

filename,

photo_lat,

photo_lon,

starbucks_lat,

starbucks_lon,

distance(photo_lat, photo_lon, starbucks_lat, starbucks_lon) as dist

FROM (

SELECT

filename,

latitude as photo_lat,

longitude as photo_lon

FROM photo_attributes

) p

JOIN (

SELECT

latitude as starbucks_lat,

longitude as starbucks_lon

FROM starbucks_directory

) s ON true

) t

WHERE t.dist < 2000 -- distance is in meters, in this case a 2km radius

GROUP BY 1

ORDER BY 2

One of the great advantages of using an SQL database is that we can ask how a query will be executed with the EXPLAIN SELECT ... statement.

The logical plan for this query is:

Sort: nearby_starbucks_count ASC NULLS LAST

Projection: t.filename, COUNT(Int64(0)) AS nearby_starbucks_count

Aggregate: groupBy=[[t.filename]], aggr=[[COUNT(Int64(0))]]

Projection: t.filename, alias=t

Projection: p.filename, alias=t

Filter: distance(p.photo_lat, p.photo_lon, s.starbucks_lat, s.starbucks_lon) < Float64(2000)

CrossJoin:

Projection: p.filename, p.photo_lat, p.photo_lon, alias=p

Projection: photo_attributes.filename, photo_attributes.latitude AS photo_lat, photo_attributes.longitude AS photo_lon, alias=p

TableScan: photo_attributes projection=[filename, latitude, longitude]

Projection: s.starbucks_lat, s.starbucks_lon, alias=s

Projection: starbucks_directory.latitude AS starbucks_lat, starbucks_directory.longitude AS starbucks_lon, alias=s

TableScan: starbucks_directory projection=[latitude, longitude]

Notice the CrossJoin (also called Cartesian product)! It's the type of join between two tables where every row in table A is joined with every row in table B.

The resulting table will have a total row count of rows in A * rows in B.

The distance() function is pretty fast: a single call returns in 5-6 microseconds on my laptop. Even so, calculating all 25600 * 21808 = 558284800 distances took me about an hour.

Can we do better?

Calculating proximity: using pre-filtered pairs

Calculating the precise distance between two points on a globe is more complex than on a plane. Still, we can use a rough heuristic to filter out photo - Starbucks pairs which are obviously much farther away than 2 km. At the equator, one degree latitude / longitude corresponds to 111km. I've never been so close to the poles that 2 degrees would be less than 2km apart, so any Starbucks more than two degrees latitude or longitude away from the photo's location will surely be out of the 2km range.

Pre-filtering pairs

First, I created a table of candidate pairs based on differences in latitude and longitude:

CREATE TABLE filtered_pairs_less_than_2_degrees_apart AS WITH

all_pairings AS (

SELECT

filename,

photo_lat,

photo_lon,

starbucks_lat,

starbucks_lon,

ABS(photo_lat - starbucks_lat) AS diff_lat,

ABS(photo_lon - starbucks_lon) AS diff_lon

FROM (

SELECT

filename,

photo_lat,

photo_lon,

starbucks_lat,

starbucks_lon

FROM (

SELECT

filename,

latitude as photo_lat,

longitude as photo_lon

FROM photo_attributes

) p

JOIN (

SELECT

latitude as starbucks_lat,

longitude as starbucks_lon

FROM starbucks_directory

) s ON true) r

)

SELECT *

FROM all_pairings

-- A difference of 2 degrees or more latitude or longitude will definitely

-- result in more than 2 kilometers between the points in the range we're

-- working with.

WHERE diff_lat < 2.0 AND diff_lon < 2.0;

The filtered_pairs_less_than_2_degrees_apart table has 687911 rows, less than 1% of the rows resulting from the cross join in the previous query.

Calculating the distance of candidate pairs

The following query filters the pairs further based on the precise distance:

CREATE TABLE filtered_pairs_less_than_2_km_apart AS SELECT * FROM (

SELECT

filename,

photo_lat,

photo_lon,

starbucks_lat,

starbucks_lon,

distance(photo_lat, photo_lon, starbucks_lat, starbucks_lon) as dist

FROM filtered_pairs_less_than_2_degrees_apart) t

-- Filter the list according to the actual distance in meters, so

-- < 2000 results in a 2km radius.

WHERE t.dist < 2000;

This second filter throws out over 94% of the rows in the filtered_pairs_less_than_2_degrees_apart table, leaving only

Starbucks locations within the 2km radius of each photograph.

The filtered_pairs_less_than_2_km_apart table contains 39715 rows, the same number we got with the original hour-long calculation, but the two

CREATE TABLE queries take a total of 15.1 seconds, less than 1% of the original runtime.

My most urban photograph

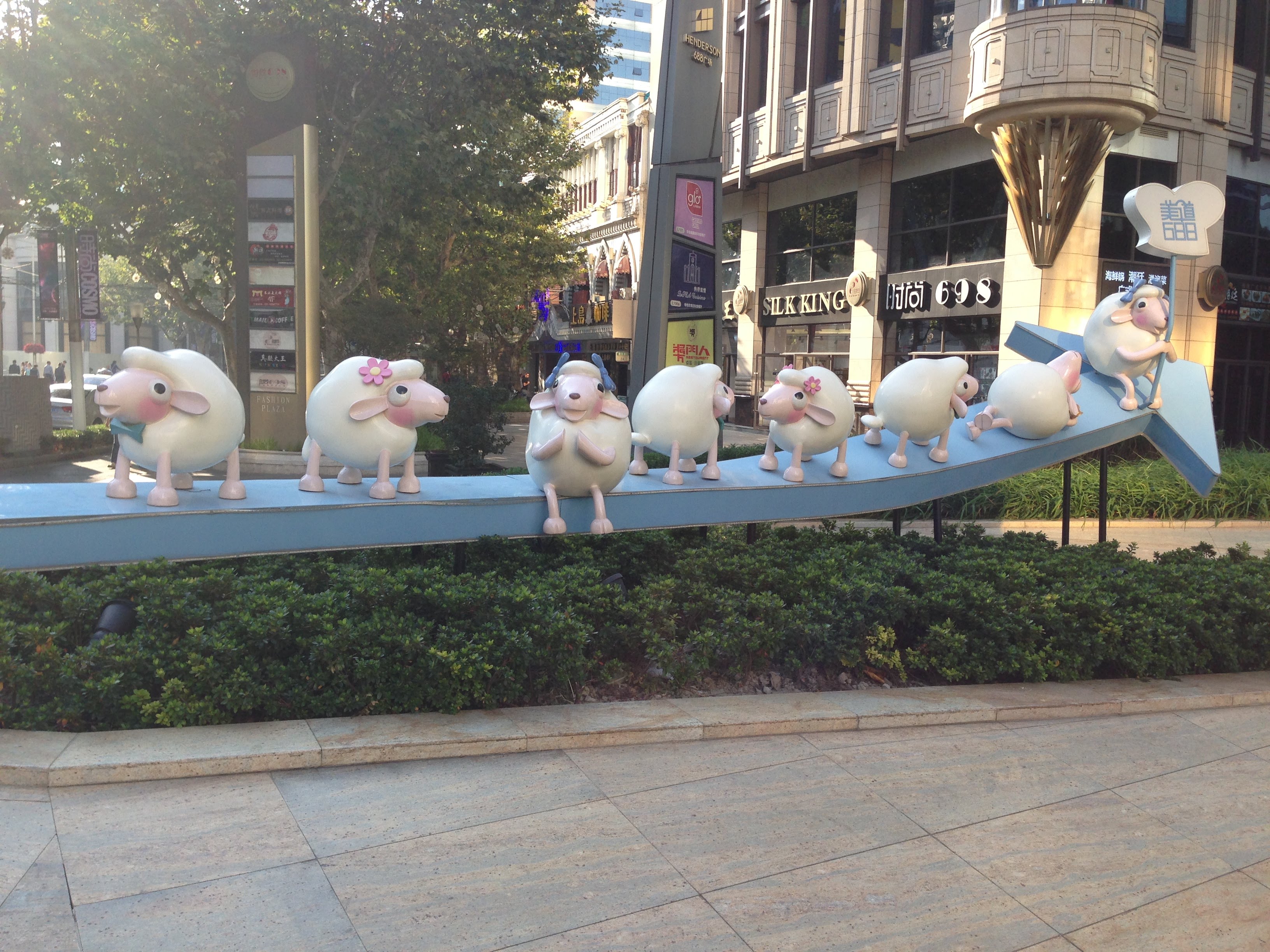

There's a clear winner among the 21808 contestants for "most urban" photo, with an OSM of 68:

Directing the Starbucks Store Locator to the coordinates where the photo was captured helps explain why. Considering Shanghai has the most Starbucks locations of any city on Earth, more than double that of Seoul at second place, it's not surprising that my "winning" photo was taken there.

How urban am I

To find out, I needed the number of photographs for each OSM score:

-- query.sql

SELECT

nearby_starbucks_count,

COUNT(0) as num_photos

FROM (

SELECT

filename,

COUNT(0) AS nearby_starbucks_count

FROM filtered_pairs_less_than_2_km_apart

GROUP BY 1

) t

GROUP BY 1

ORDER BY 1 ASC;

I used the following bash command to get the results of the query as CSV with the help of jq:

curl -H "Content-Type: application/json" http://localhost:8080/q -d@- << END |

{"query": $(cat "query.sql" | jq -Rsa .)}

END

jq -r '[.nearby_starbucks_count, .num_photos] | @csv' > photos_by_osm.csv

Using the Node.js Seafowl client is also an option:

seafowl/examples/clients/node/seafowl-client.js -f filtered_step3_simplified_osm_histogram.sql | tail -n +2 | jq -r '.[] | [.nearby_starbucks_count, .num_photos]' | @csv > photos_by_osm.csv'

With most of my photos having an OSM of 0, the answer seems to be that I'm not very urban, especially when compared to someone who feels at home in Shanghai:

Urbanness over time

If "urbanness" is an innate quality unlikely to change much once our adult personality has developed, any reliable indicator should be pretty stable over time. Looking at the number of photos I've taken with OSM ≥ 1 each month makes it seem like that is indeed the case.

The exception is a trip to Shanghai in October of 2015 when I took the picture with the plastic sheep shown above.

Conclusion

It was fun analyzing where I've taken photos over the last decade. Seafowl has been a convenient tool throughout the process:

- CSV import made it easy to work with data from Kaggle and Google Photos.

- We can easily extend Seafowl's builtin functions with our own UDFs.

- JSON output is convenient in most cases and can easily be converted to CSV using

jqwhen necessary.

I look forward to all the cool stuff people build with this fast new database!